Every now & then something new and exciting-looking comes along. And it isn’t always obvious, without looking into it dispassionately and in great detail, just how revolutionary it really is. Or isn’t.

Blockchain, for example, was supposed to solve all manner of problems. Many specialist blockchain companies sprang up. Some of them even still exist, but none of their investors are exactly retiring on the proceeds.

I wrote about blockchain hype back in 2019, and updated my predictions here in 2020.

Now the hype is all about AI. And don’t misunderstand me: AI is exciting and can do many things. But it is important that we cut through the hype and look at what it CANNOT do well, as well as at what it CAN do well. Getting to the bottom of this can be difficult. It takes time, and actual research[1]. In the real world of scientific research, one rarely gets the whole question answered in one go; the research chips away at areas of uncertainty.

It is in this spirit that I would like to draw some attention to a recent paper (October 2024) entitled “GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models”. The paper looks only at mathematical reasoning, but that is easier to quantify than more general reasoning. It’s an interesting and useful place to start, methinks. Mathematical reasoning is more structured and rules-constrained than more general forms of reasoning, and so should be easier to achieve.

The paper is a fairly hefty 22 pages in length, with little waffle so quite dense. Just how I like them. Plenty of examples to guide you through the research and conclusions.

For the impatient, here’s the TL;DR: the key findings from the paper.

“Our findings reveal that LLMs exhibit noticeable variance when responding to different instantiations of the same question. Specifically, the performance of all models declines when only the numerical values in the question are altered in the GSM-Symbolic benchmark. Furthermore, we investigate the fragility of mathematical reasoning in these models and demonstrate that their performance significantly deteriorates as the number of clauses in a question increases. We hypothesize that this decline is due to the fact that current LLMs are not capable of genuine logical reasoning; instead, they attempt to replicate the reasoning steps observed in their training data. When we add a single clause that appears relevant to the question, we observe significant performance drops (up to 65%) across all state-of-the-art models, even though the added clause does not contribute to the reasoning chain needed to reach the final answer. Overall, our work provides a more nuanced understanding of LLMs’ capabilities and limitations in mathematical reasoning.” (Emphasis mine)
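To make "different instantiations of the same question" a bit more concrete, here is a minimal Python sketch of the general idea: one question template, filled in with different names and numbers, optionally with a clause that sounds relevant but changes nothing. This is my own illustration and not the paper's code; the template wording, the `make_variant` function and the distractor clause are all hypothetical.

```python
import random

# Hypothetical template: the wording is illustrative, not taken from the paper.
TEMPLATE = (
    "{name} picks {a} apples on Monday and {b} apples on Tuesday. "
    "{distractor}How many apples does {name} have in total?"
)

# A clause that sounds relevant but does not change the correct answer.
DISTRACTOR = "However, {c} of the apples are slightly smaller than average. "


def make_variant(rng, with_distractor=False):
    """Return (question_text, correct_answer) for one random instantiation."""
    name = rng.choice(["Sophie", "Liam", "Priya"])
    a = rng.randint(5, 50)
    b = rng.randint(5, 50)
    c = rng.randint(2, 4)  # always drawn, so a given seed yields the same a and b
    distractor = DISTRACTOR.format(c=c) if with_distractor else ""
    question = TEMPLATE.format(name=name, a=a, b=b, distractor=distractor)
    # The answer depends only on a and b; the distractor is irrelevant.
    return question, a + b


if __name__ == "__main__":
    rng = random.Random(0)
    for flag in (False, True):
        question, answer = make_variant(rng, with_distractor=flag)
        print(question, "->", answer)
```

A model that is genuinely reasoning should score the same on every variant, because the arithmetic never changes. The paper's finding is that scores drop when only the numbers change, and drop further when a clause like the "smaller than average" one is added.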

Whether current LLMs are genuinely capable of true logical reasoning remains an important research focus. In my own opinion, the evidence for it is slim; it’s just probabilistic pattern-matching rather than formal reasoning. However, that’s just an opinion from my informal, non-rigorous experiments with these models, which focus on finding tasks at which they perform badly or terribly. The science will, however, eventually provide actual evidence.

Conclusions

Understand what LLMs and AI can do, but appreciate their limitations too. Don’t listen to sales talk, or you’ll end up with the “AI Powered Pen” from above (where AI refers to the price which has been Artificially Inflated). There are many, many useful things which current LLMs & similar AIs can accomplish. But there are also plenty of tasks for which they are not suited at this time – and, maybe, ever. Time will tell; watch out for the actual science. Don’t fall for hype. This article started with some Latin; let’s finish with some too: Caveat emptor – Buyer beware!

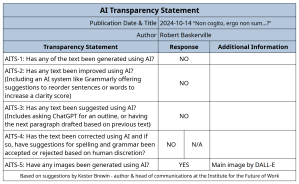

Footnotes & AI Transparency Statement

[1] That’s actual research – peer-reviewed, repeatable, scientific-method-based research. Not “did my own research™️” by watching some YouTube 5G-anti-vax-Covid-flat-earth-chemtrail-faked-moonlandings-black-helicopters-JFK-Elvis-Lord-Lucan-Freemasonry-Illuminati-false-flag-11th-September-Deep-State-QAnon-great-replacement-Aliens-Have-Taken-Over-The-Government-And-Only-I-Know conspiracy garbage. Just for the avoidance of all doubt.